
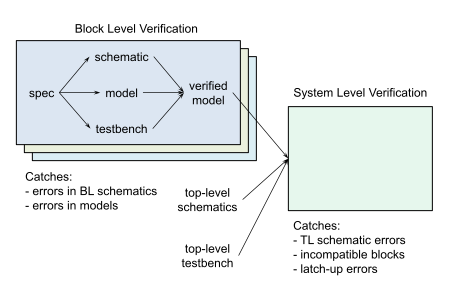

The approach to Yosemite’s Half Dome

There are two fundamental steps in an analog verification process. In the first, the analog portion of the design is partitioned into manageable blocks, and each block is modeled. These models make higher-level simulations practical. The second step is to verify the higher-level system with these simulations. The higher-level system may be the mixed-signal portion of the design, large portions of the design, or the entire chip. Regardless, it is the creation of validated, efficient models in the first step that makes the second step possible.

Block-Level Modeling

It’s rare that we make mistakes in digital decode logic in the analog. Fortunately, the testbench found it.

Analog designer of a PGA

In digital design, one starts with RTL, which is then synthesized to produce the implementation. The tools ensure that the RTL and the implementation are functionally equivalent. The verification challenge is not that the implementation might differ functionally from the RTL; rather, it is that the behavior of the RTL can be quite complicated. Thus, from a functional perspective, the RTL is an executable specification for the design, and the challenge is to verify that this specification is correct. In analog design, the functional behavior is considerably simpler, but there is no equivalent of RTL. Rather than a spec that can be simulated, the specs take the form of text documents or spreadsheets. Here the challenge is not so much the complexity of the specification as determining whether what was implemented matches it.

The testbenches found issues we forgot to check for, such as one where the bias remained on when the block was supposed to be in sleep mode.

Analog designer of an ADC

To address this issue, we introduce the concept of an executable specification for analog blocks: the MiM spec. It is not possible to synthesize the circuit from this spec. Instead, our product, MiM, generates a model and a testbench from the spec. The testbench is fully autonomous and exhaustive. The testbench compares the behavior of the model with that of the block-level (BL) schematic. Once all tests pass, the model, the schematic, and the spec are all consistent.
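To make the model-versus-schematic comparison concrete, here is a minimal Python sketch of what one self-checking, exhaustive check might look like. It is not MiM's generated code. The 3-bit gain code, the 2 dB step, the tolerance, and the functions `model_gain` and `schematic_gain` are all hypothetical; in a real flow the model would be Verilog-AMS or SystemVerilog and the schematic values would come from a circuit simulator. The stand-in schematic deliberately decodes the gain code as unsigned while the model treats it as signed, the kind of encoding mismatch described in the PLL anecdote.

```python
from typing import List, Tuple

# Hypothetical PGA: 3-bit gain code, 2 dB per step of the decoded code.
CODE_BITS = 3
STEP_DB = 2.0
TOL_DB = 0.1  # hypothetical pass/fail tolerance in dB


def decode_signed(code: int, bits: int) -> int:
    """Interpret `code` as a two's-complement integer of width `bits`."""
    return code - (1 << bits) if code & (1 << (bits - 1)) else code


def model_gain(code: int) -> float:
    # Model: the (hypothetical) spec says the gain code is signed.
    return STEP_DB * decode_signed(code, CODE_BITS)


def schematic_gain(code: int) -> float:
    # Stand-in for a transistor-level simulation result. This schematic
    # decodes the code as unsigned, which is the injected bug.
    return STEP_DB * code


def compare_all_codes() -> List[Tuple[int, float, float]]:
    """Sweep every gain code and return (code, model, schematic) mismatches."""
    failures = []
    for code in range(1 << CODE_BITS):
        m, s = model_gain(code), schematic_gain(code)
        if abs(m - s) > TOL_DB:
            failures.append((code, m, s))
    return failures


if __name__ == "__main__":
    for code, m, s in compare_all_codes():
        print(f"code {code:0{CODE_BITS}b}: model {m:+.1f} dB, schematic {s:+.1f} dB")
```

A spot check of codes 0 through 3 would pass, because signed and unsigned decoding agree there; only the sweep over the full code range exposes the mismatch at codes 4 through 7.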

If the testbench had not caught that our schematic used unsigned encoding instead of signed in the model, the RTL would have been changed to the encoding in the model and we would have introduced an error in the RTL.

Engineering manager describing a PLL system

The validation of the model against the schematic is a crucial step that is often skipped or performed perfunctorily. This is dangerous: it is not uncommon for working designs to be broken by changes made to accommodate an incorrect model that was never validated. Verifying with models that have not been validated is not verification; it is testing. The difference is that testing is opportunistic whereas verification is systematic. With the former, you run some tests in the hope of finding an error. With the latter, you systematically check for the ways you make mistakes and eliminate them.

Chip and System-Level Verification

Once efficient, validated models are available, attention turns to verifying the higher-level system or systems. This catches errors in the system-level specification, as well as errors in the top-level (TL) schematics and any inter-block inconsistencies.
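As one illustration of a systematic check for inter-block inconsistencies, the Python sketch below compares the declared width and encoding at both ends of every top-level net. The block names (`dsp`, `pga`, `ldo`), port names, and the `Port` record are hypothetical; in practice such declarations might be extracted from the block models, but how that is done is outside this sketch.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Port:
    width: int
    encoding: str  # e.g. "signed" or "unsigned"


PortKey = Tuple[str, str]  # (block, port)

# Hypothetical port declarations for each block.
PORTS: Dict[PortKey, Port] = {
    ("dsp", "gain_out"): Port(width=3, encoding="signed"),
    ("pga", "gain_in"): Port(width=3, encoding="unsigned"),
    ("dsp", "trim_out"): Port(width=4, encoding="unsigned"),
    ("ldo", "trim_in"): Port(width=4, encoding="unsigned"),
}

# Hypothetical top-level nets as (driver, receiver) pairs.
NETS: List[Tuple[PortKey, PortKey]] = [
    (("dsp", "gain_out"), ("pga", "gain_in")),
    (("dsp", "trim_out"), ("ldo", "trim_in")),
]


def inter_block_mismatches(
    nets: List[Tuple[PortKey, PortKey]], ports: Dict[PortKey, Port]
) -> List[str]:
    """Return a message for every net whose two ends disagree."""
    problems = []
    for drv, rcv in nets:
        a, b = ports[drv], ports[rcv]
        if a != b:  # dataclass equality compares width and encoding
            problems.append(f"{drv[0]}.{drv[1]} -> {rcv[0]}.{rcv[1]}: {a} vs {b}")
    return problems


if __name__ == "__main__":
    for msg in inter_block_mismatches(NETS, PORTS):
        print(msg)
```

Because every net is checked, rather than only the ones someone thought to look at, the gain-code encoding mismatch is reported regardless of which block's designer made the mistake.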

The Verilog-AMS from MiM is perfect for mixed-level performance analysis of our analog system. The SystemVerilog models are perfect for our chip-level verification.

Lead verification engineer of a power management group

Testbenches are written by hand to check system-level functionality. These simulations are efficient because all transistor-level schematics have been replaced by models. It is possible to swap out one or two models and replace them with their schematics. This process, referred to as mixed-level simulation, can help you understand how effects that are not included in the models affect the overall system. Because it makes simulations slow, it is used sparingly. System-level verification can be performed at the analog sub-system level or at the chip level. Of course, when performing chip-level verification, you will want models that are very fast and compatible with your simulator.
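A minimal sketch of the bookkeeping behind a mixed-level run, assuming each top-level block can be bound to either a `"model"` or a `"schematic"` view. The block names and the two-schematic limit are hypothetical, and this is not any particular simulator's or design environment's configuration format; those tools have their own view-selection mechanisms.

```python
from typing import Dict, Iterable

# Hypothetical top-level analog blocks, all bound to their models by default.
DEFAULT_VIEWS: Dict[str, str] = {
    "pga": "model",
    "adc": "model",
    "pll": "model",
    "ldo": "model",
}

# Hypothetical limit: transistor-level views are slow, so swap only a few.
MAX_SCHEMATIC_BLOCKS = 2


def mixed_level_config(
    swap: Iterable[str], base: Dict[str, str] = DEFAULT_VIEWS
) -> Dict[str, str]:
    """Return a copy of `base` with the named blocks bound to their schematics."""
    swap = list(swap)
    unknown = [b for b in swap if b not in base]
    if unknown:
        raise KeyError(f"unknown blocks: {unknown}")
    if len(swap) > MAX_SCHEMATIC_BLOCKS:
        raise ValueError(
            f"{len(swap)} schematic blocks requested; limit is {MAX_SCHEMATIC_BLOCKS}"
        )
    config = dict(base)  # copy so the all-model default is never modified
    for block in swap:
        config[block] = "schematic"
    return config


if __name__ == "__main__":
    # Study how the ADC's unmodeled effects show up with everything else modeled.
    print(mixed_level_config(["adc"]))
```

The guard on the number of schematic views reflects the trade-off described above: each swap adds realism for that block but slows the whole simulation.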

What’s Needed for Chip-Level Verification and Catching Bugs

Analog Verification Engineers

An analog verification team must be staffed with dedicated analog verification engineers. These engineers need not remain verification engineers from one project to the next, but for the duration of a project they should devote all their time to verification; otherwise, verification is often not given proper priority. Giving engineers design and verification responsibilities at the same time is a reliable leading indicator that a verification effort will fail. A side benefit of having dedicated analog verification engineers is that more than one person is focused on understanding and checking the functionality of each block, and of the analog system as a whole, which greatly increases the likelihood that errors are caught early.

Automation

Even with experienced analog verification engineers, verification would still be too expensive without substantial use of automation. Scripting the running of tests is not sufficient; the automation must also extend to the creation and maintenance of the models and the testbenches. In addition, all testbenches should be completely autonomous (self-checking), and regression tests should be scripted. All of these forms of automation are required for the verification process to be efficient. Without automation, testing is time-consuming and tedious. As a result, engineers avoid or minimize the time spent on verification, which then becomes quite superficial. With automation, tests become more plentiful and systematic. Furthermore, once all the tests have been automated, it becomes possible to check out the final version of the design just before tape-out and quickly rerun all the tests to ensure that no last-minute mistakes were made.
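As a rough illustration of a scripted regression, here is a minimal Python runner, assuming each autonomous testbench can be wrapped as a function that returns True on pass. The test names are hypothetical placeholders; in practice each entry would launch a simulation and check its results. Returning a nonzero exit status when anything fails lets the same script gate a final rerun just before tape-out.

```python
import sys
from typing import Callable, Dict

# Hypothetical registry of self-checking tests. Each returns True on pass;
# here they are trivial stand-ins for real simulation runs.
TESTS: Dict[str, Callable[[], bool]] = {
    "pga_gain_codes": lambda: True,
    "adc_sleep_bias": lambda: True,
    "pll_lock_range": lambda: True,
}


def run_regression(tests: Dict[str, Callable[[], bool]]) -> Dict[str, str]:
    """Run every test and record PASS, FAIL, or ERROR for each."""
    results = {}
    for name, test in tests.items():
        try:
            results[name] = "PASS" if test() else "FAIL"
        except Exception as exc:  # a crashing test is reported, not fatal
            results[name] = f"ERROR: {exc}"
    return results


def main() -> int:
    results = run_regression(TESTS)
    for name, status in results.items():
        print(f"{name:20s} {status}")
    failed = sum(status != "PASS" for status in results.values())
    print(f"{len(results) - failed}/{len(results)} passed")
    return 1 if failed else 0  # nonzero exit signals failure to the caller


if __name__ == "__main__":
    sys.exit(main())
```

Catching exceptions per test keeps one broken testbench from hiding the results of all the others.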
